At the beginning of last month (April), Google announced the release of a large-scale update to its search algorithm that took place in late February. This particular update, which the webmaster community refers to as “Panda 3.3”, has been considered an extension of the previous updates rolled out over the last year (the original “Panda” and its follow-ups). But it is unlike those earlier updates, which were about content, identifying the originators of content, and brands: what Google Panda was really all about. This is likely why this latest tweak has been given a brand new, squeaky clean name, “Penguin”. It's not about content anymore. No, it's clearly about links.

The Google Penguin update was announced by Google's Matt Cutts on Tuesday, April 24th, 2012. Cutts wrote the following on the official Google blog, in a post titled “Another step to reward high-quality sites”:

“In the next few days, we're launching an important algorithm change targeted at webspam. The change will decrease rankings for sites that we believe are violating Google's existing quality guidelines. We've always targeted webspam in our rankings, and this algorithm represents another improvement in our efforts to reduce webspam and promote high quality content.”

Basically, by April 24th, 2012, Google was finally ready to release a significant update it had obviously been testing for some time, confident that it could go after all those link buyers and launch an attack on the sites that had been getting away with murder: those dirty rats who had been manipulating its search results through anchor text links for years.

Now, this was clearly nothing new for Google. They've long warned about buying links and manipulating anchor text, and they've long told webmasters to make sites for users, not for search engines. But until now, they obviously weren't confident enough in their ability to go after offending sites both ‘aggressively’ and ‘algorithmically’ without accidentally catching some innocent web sites in their net or, worse, creating an all too easy way for unscrupulous webmasters to sabotage their competitors. Nope, they've either perfected a method of catching the bad guys without accidentally hurting the good, or they've simply stopped caring, and it's likely the former of the two… (I know, I know, they're evil sometimes, but not here).

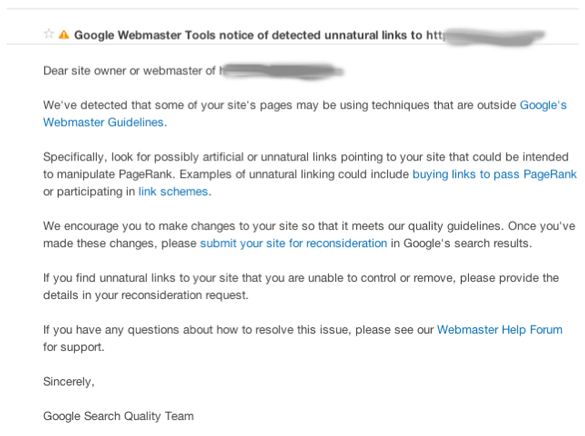

Above is what the message looks like for the “Unnatural Links Penalty”.

Here is how it all came to pass:

In late February, just about the time the first signs of a ‘link penalty’ or ‘over optimization’ penalty were noticed, Google released an update that was specifically labeled as a change to “Link evaluation”. The official Google blog described it in a post called “Search quality highlights: 40 changes for February”:

“We often use characteristics of links to help us figure out the topic of a linked page. We have changed the way in which we evaluate links; in particular, we are turning off a method of link analysis that we used for several years. We often re-architect or turn off parts of our scoring in order to keep our system maintainable, clean and understandable.”

This was the first signal that links were under attack in a new way. It was likely one of the finishing touches (if not THE finishing touch) on the anchor text manipulation detection Google had long been working on, and it was time for an initial test. Now, since understanding ‘Search’ always involves some educated guessing, here is how they've “probably” gone about it, explained in its simplest form.

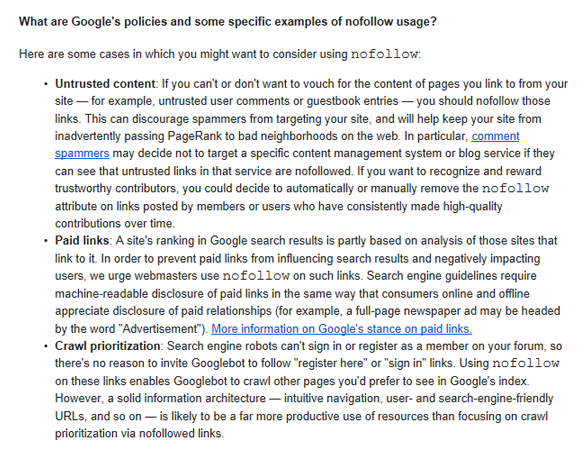

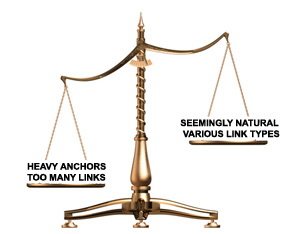

Google has spent the last few years talking about “brands” (they've been working on this for years), and both good sites and bad sites buy links. So there has to be a way to target link purchasers, specifically those who heavily abuse anchor text, without screwing up the entire index, because many great sites do buy links. And many great web sites don't honor Google's requests to use “nofollow” on paid ads or untrusted links, so nofollow just isn't a reliable signal across the board (65% or more of the web isn't listening to this stuff).

So, given that link purchasing is fundamental to advertising and web site promotion, Google needs to make sure its efforts penalize only the offenders: sites which ‘overdo it’, or whose rankings come primarily from links. Not great sites steered by misguided marketers, or sites which would rank well anyway.

Here is how you get it done.

Although this latest update does warrant its new name, Google Panda did help Google Penguin accomplish its mission: identifying the sites that shouldn't be affected by mistake. You take everything you know about content, quality and the power of brands (what Panda successfully accomplished over the last year or so), and you use it to create a list of “too big to fails”. Those are the sites that are “unpenalizable” to some extent (yes, I made that word up), or sites that are clearly a dominant force in their niche. You move those sites into a group that competitors can't take down and that is safe from being shuffled when the link assault dubbed “Penguin” is implemented. Then you take the rest of the sites, the ones you can afford to shuffle around, and you unleash search engine havoc with Penguin.

What you wind up with is:

- Big brands stay where they are (or move up) regardless of what is happening in search.

- Little junk sites or sites that aren’t good, those which survived solely on the manipulation of anchor text, get the boot.

- Everyone else, specifically those not doing any link building at all, winds up at the bottom of page 1, or on page 2, 3 or 4 (a lot higher than they used to be, since the link hoarders are gone).

Google also begins selling lots more pay-per-click advertising, because everyone losing out on all that free traffic panics, regrets all of those link buying tactics, and starts spending boatloads of money on PPC (instead of links) to regain traffic they're not too confident they'll get back any time soon (the hidden added benefit for the ole' GOOG).

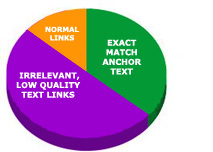

This whole thing happens when a web site's back link profile becomes over-weighted with low quality, irrelevant links. Why doesn't it happen right away, and why doesn't it affect all sites that purchase links? There is a science behind that too, and it was all built and categorized within Panda. A good site has a fairly healthy and diversified back link profile on its own and holds ‘brand value’.

Sites are only affected when two things take place:

- Anchor text is identified as unnatural: the ‘exact anchor text’ is overused, and the back link profile as a whole shows an unnatural frequency of that anchor text.

- The irrelevant, low quality links begin to outweigh the links which appear relevant and natural. In other words, when garbage links make up most of a site's back link profile, say 65% to 70%, the penalty hits.

Both these things need to take place for Google Penguin to become a disaster for you.
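To make the two-condition idea concrete, here is a toy self-audit of a back link profile in Python. It is a minimal sketch of the hypothesis described above, not Google's algorithm. The `Backlink` type, the `is_low_quality` label (which you would have to assign yourself), and the 30% "overused anchor" cutoff are all hypothetical; only the 65% figure comes from the guess in the list above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Backlink:
    anchor_text: str
    is_low_quality: bool  # hypothetical label: irrelevant or low-quality source


# Hypothetical thresholds, for illustration only.
TOP_ANCHOR_SHARE_LIMIT = 0.30   # assumed cutoff for an "overused" exact anchor
LOW_QUALITY_SHARE_LIMIT = 0.65  # low end of the 65%-70% guess above


def top_anchor_share(links):
    """Return (most common normalized anchor, its share of all links)."""
    if not links:
        return "", 0.0
    counts = Counter(link.anchor_text.strip().lower() for link in links)
    anchor, count = counts.most_common(1)[0]
    return anchor, count / len(links)


def low_quality_share(links):
    """Fraction of links labeled low quality."""
    if not links:
        return 0.0
    return sum(1 for link in links if link.is_low_quality) / len(links)


def at_risk(links):
    """True only when BOTH conditions hold, mirroring the hypothesis."""
    _, anchor_share = top_anchor_share(links)
    anchor_overused = anchor_share > TOP_ANCHOR_SHARE_LIMIT
    garbage_dominates = low_quality_share(links) >= LOW_QUALITY_SHARE_LIMIT
    return anchor_overused and garbage_dominates


if __name__ == "__main__":
    spammy = (
        [Backlink("cheap blue widgets", True)] * 7
        + [Backlink("Acme Widgets", False)] * 2
        + [Backlink("acmewidgets.com", False)]
    )
    print(at_risk(spammy))  # True: 70% exact anchor, 70% low quality
```

The `and` in `at_risk` is the point of the sketch: a profile that leans heavily on one anchor but is mostly made of decent links (or vice versa) is not flagged, which matches the claim that both things need to take place.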

If both of these things do take place, there is a very good chance Google will alter your site's rankings, and for good reason. Your site isn't all that important to the web anyway (because your back link profile is very weak), and you're out there spamming the net, so why not use you as an example?

Interestingly enough, Matt Cutts said in a Google+ post that in mid-April (April 18th) Google misclassified some sites as parked, which led to them being hit by accident, and that this was corrected shortly before his post. Matt Cutts is sort of Google's official webmaster PR person: he has a lot to do with Google's voice and the face behind the algorithm, and he does lots of videos that help webmasters follow Google's Webmaster Guidelines. He stated:

“I saw a recent post where several sites were asking about their search rankings. The short explanation is that it turns out that our classifier for parked domains was reading from a couple files which mistakenly were empty. As a result, we classified some sites as parked when they weren't. I apologize for this; it looks like the issue is fixed now, and we'll look into how to prevent this from happening again.”

A user named Matt Byrne asked the following question, which Cutts answered:

“Thanks for the info. Can you tell us if there's a way to check if we were affected? I've felt sand-boxed for the last couple weeks; was wondering if it was my integration of my twitter feed. Or do we just wait to see? Thanks!”

“This was a very recent issue, so if you’re seeing something from a week or more ago, that wouldn’t be it.”

This means that if your site was hit a week or so before April 18th, your drop in rankings isn't related to this bug; it's related to the update released in late February. Alternatively, if your site was affected and its rankings later returned to where they were just before April 18th (or improved), your site was one of the ones that were mistakenly hit, and you weren't meant to be affected. In other words, if your site's traffic has returned to where it once was, or has improved, you've made it out of this mess, at least for now. You were just hit by accident.

Was it really a classifier for parked domains, or an algorithm update that went too far?

Whatever it was, the announcement of “Google Penguin” came on April 24, 2012, just after this happened. In my opinion (and again, understanding ‘Search’ involves some guessing), the initial big change at the end of February was actually a small-scale test of “Penguin” and had nothing to do with Panda 3.3. It was actually “Test Penguin”, which went fairly well aside from going a little too far. The “domain classifier error” announced in mid-April for parked domains possibly wasn't entirely true; it may have been some Google fluff to keep secret what must be safeguarded with the utmost secrecy, the key decisions behind testing the algorithm live. What really happened may have been a “too powerful Penguin”, which was then tweaked and released just recently.

About The Author: John Colascione is Chief Executive Officer of Internet Marketing Services Inc. He specializes in Website Monetization, is a Google AdWords Certified Professional, authored a ‘how to’ book called “Mastering Your Website”, and is a key player in several Internet related businesses through his search engine strategy brand Searchen Networks®.

*** Here Is A List Of Some Of The Best Domain Name Resources Available ***
