Google’s new “Knowledge Graph” has been one of the most discussed of Google’s many recent releases. It may also be the reason, or at least a contributing factor, behind Bing entering into a closer relationship with Encyclopedia Britannica. Since Google announced Knowledge Graph, the feature has appeared on countless search pages, and it is often based on information taken from Wikipedia. Wikipedia itself appears at the top of Google results quite often. For example, before the Knowledge Graph update, Wikipedia was found at the top of Google search results about 50 percent of the time for informational searches.

Users can edit Wikipedia live, which gives it a means to update information continuously. (A wiki is a website that allows its users to add, modify, or delete content via a web browser, using a simplified markup language or a rich-text editor.) Studies showed that when information in the Knowledge Graph didn’t match Wikipedia, Google was behind by two or more days. This means that searchers find the most relevant and current information about certain keywords about 50% of the time. In some instances the delay can be more significant: if a sports star wins a championship, the graph might not show it until a few days afterwards.
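To make the idea of "lag" concrete, here is a minimal sketch of how the gap between a Wikipedia edit and the matching Knowledge Graph change could be measured. It is only an illustration: the function names, the two-day threshold and the timestamps are all hypothetical, and nothing here reflects how Google or the studies mentioned above actually measured the delay.

```python
from datetime import datetime, timedelta


def update_lag(wikipedia_edit: datetime, graph_updated: datetime) -> timedelta:
    """Return how long the graph trailed the Wikipedia edit (hypothetical helper)."""
    if graph_updated < wikipedia_edit:
        raise ValueError("graph update cannot precede the Wikipedia edit")
    return graph_updated - wikipedia_edit


def is_stale(lag: timedelta, threshold: timedelta = timedelta(days=2)) -> bool:
    """Flag a lag at or above the threshold (two days is an assumed cut-off)."""
    return lag >= threshold


# Hypothetical timestamps, chosen only for illustration.
edit_time = datetime(2012, 6, 1, 9, 0)      # when the Wikipedia article changed
graph_time = datetime(2012, 6, 3, 15, 0)    # when the graph was seen to match

lag = update_lag(edit_time, graph_time)
print(f"Lag: {lag}; stale: {is_stale(lag)}")
```

Recording the two timestamps by hand for a handful of searches would be enough to repeat the comparison described in this post.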

The Web’s best information sources need to stay relevant, factually accurate and up-to-date (fresh). While Wikipedia has long been criticized for its lack of authority and trust, the online encyclopedia is updated faster than most sources of information. Several studies have assessed Wikipedia’s reliability. An early study, published in the journal Nature in 2005, found that “Wikipedia scientific articles came close to the level of accuracy in Encyclopedia Britannica and had a similar rate of ‘serious errors’”. This combination of speed and reasonable accuracy makes Wikipedia more attractive to users who want relevant results. What’s somewhat unusual about this situation is that Google has long boasted about its indexing speed.

In the “Company Philosophy” (“What we believe”) section of its web site, Google lists “Ten things we know to be true”. Item number 3 is “Fast is better than slow”, partially defined as “We keep speed in mind with each new product we release, whether it’s a mobile application or Google Chrome, a browser designed to be fast enough for the modern web. And we continue to work on making it all go even faster”.

Increasing indexing speed is quite different from updating references, however. Search engines index pages in a number of ways, and the process is largely automated. Automation is perhaps the only way to increase the rate at which the graph feature is updated.

While some are upset with the lag, Google cannot put speed before accuracy, as doing so carries considerable risk. Inaccurate information could make the graph less valuable, since it takes time for the web community to find and repair vandalism on Wikipedia (although editors usually catch changes quickly). A 2003 study conducted by MIT Media Lab and IBM concluded that “vandalism is usually repaired extremely quickly”, in fact “so quickly that most users will never see its effects”.

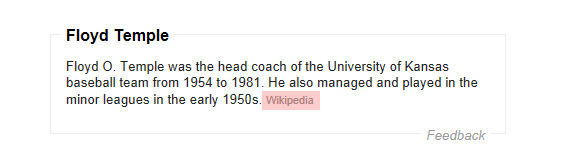

For this reason, though, Google does allow users to report incorrect information by clicking on “Feedback”.

Note: Clicking on an item marked “Wrong?” only alerts Google to the inaccuracy of the item clicked; it does not allow users to write a message.

- So, how is Google doing all of this?

- Does Google use only Wikipedia or do they use other sources?

- Where did Google get the technology behind building out these graphs?

A post from 2010 may answer these questions. On July 16, 2010, Google announced the acquisition of “Metaweb” in a post on the Official Google Blog titled “Deeper understanding with Metaweb”. At the time, Metaweb was described as “An entity graph of people, places and things, built by a community that loves open data”, with a database of over 12 million things, including movies, books, TV shows, celebrities, locations, companies and more. If most of the technology behind Google’s new “Knowledge Graph” does in fact come from this acquisition, it took at least two years to bring it into search.

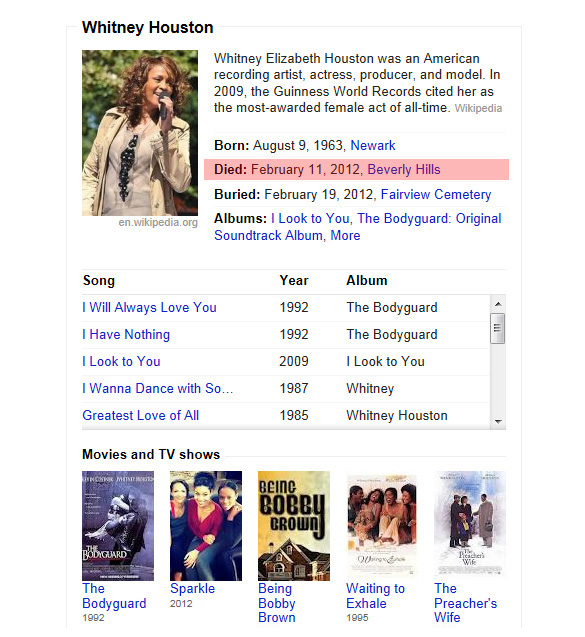

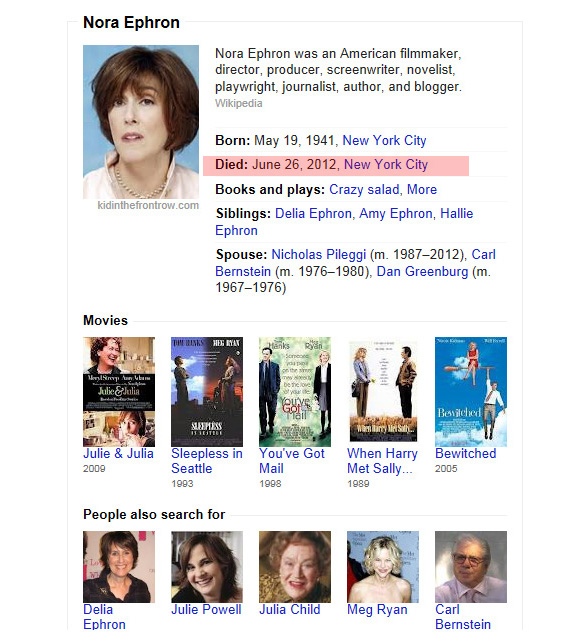

Still, one of the biggest problems with the graph’s lack of updates is how it reacts to recent news stories. For example, celebrity arrests and deaths are often searched for very close to the time the events take place. While it might sound a little morbid, many users search for a celebrity when they hear that the individual has died. Google Knowledge Graph results, if delayed by even just two or three days, would suggest that someone is alive when they are actually deceased (there is an example of this at the end of the post – 7/15/2012).

Below are some knowledge graphs that were slow to reflect an individual’s recent death, some by as much as four (4) days. Most knowledge graphs checked, however, were updated and fresh, particularly those of the most notable individuals with lengthy and complete graphs. (Post update: found a notable celebrity with at least a 2-day delay – 7/15/2012)

Old forum posts could be an additional hurdle. Posts on bulletin boards, communities and web forums sometimes outrank sites with superior information. Some developers say this shows that Google has the wrong idea about content. Natural links are starting to vanish, according to some sources, and Google hasn’t adapted to the idea that some legitimate sites use techniques that it considers illegitimate.

Freshness isn’t always an indicator of quality, either. Many have publicly pointed out that Google’s most recent results for many keywords return content from many of the same sources. Google did seem to compromise a bit on quality when it rolled out Penguin, whose intent was to target sites that were aggressively building links through unnatural means. The trouble is that some of the sites that disappeared were actually good results for searchers and shouldn’t have disappeared from the results, and some users are being left with worse results. It seems Google puts quality first and foremost unless it wants to shoot someone in the foot for not following the rules.

All in all, “Knowledge Graph” is a great addition to Google.com, and while Google cannot put speed over accuracy, it will likely continue to find ways to update the Knowledge Graph more quickly without compromising quality in the process.

Update: 7/15/2012

Found: Two-day lag time on Knowledge Graph for breaking news of a celebrity death.

About The Author: John Colascione is Chief Executive Officer of Internet Marketing Services Inc. He specializes in Website Monetization, is a Google AdWords Certified Professional, authored a ‘how to’ book called “Mastering Your Website”, and is a key player in several Internet related businesses through his search engine strategy brand Searchen Networks®.


